The Famous Chinese Room Thought Experiment

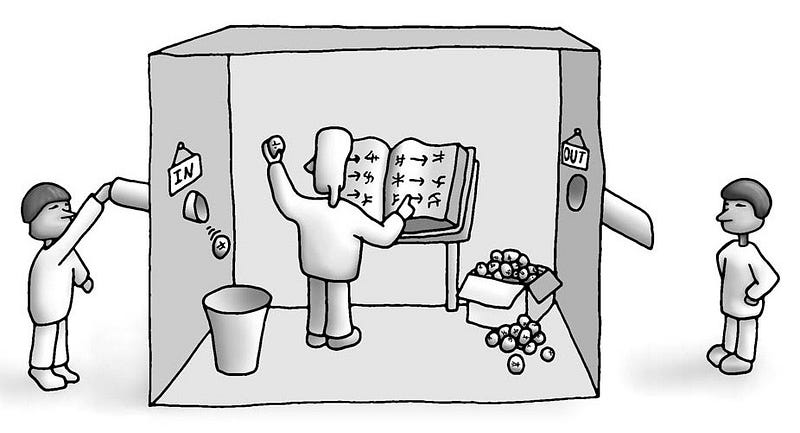

Imagine that a non-Chinese speaker is led into a room full of Chinese symbols, along with a book of intricate rules for manipulating those symbols.

Then someone outside the room passes him a series of Chinese symbols, and, following the rule book, he selects the appropriate symbols to send back out.

Here’s the important part. Unbeknownst to the man, the symbols being passed into the room are questions in Chinese, and the symbols he passes back out, chosen by following the rule book, are answers to those questions.
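To make the man’s job concrete, here is a minimal sketch of a rule book as a simple lookup table. The rules are hypothetical placeholders made up for illustration (Searle’s imagined rule book would be vastly more intricate), but they share the key property: the function matches strings of symbols to other strings of symbols, and nothing in it represents what any symbol means.

```python
# A hypothetical "rule book": each rule pairs an incoming string of symbols
# with the string to pass back out. These entries are illustrative only.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",          # "How are you?" -> "I'm fine, thanks."
    "你叫什么名字？": "我叫约翰。",        # "What's your name?" -> "My name is John."
    "今天天气怎么样？": "今天天气很好。",  # "How's the weather today?" -> "The weather is nice today."
}

# Fallback when no rule matches: "Sorry, I don't understand."
DEFAULT_REPLY = "对不起，我不明白。"


def chinese_room(symbols: str) -> str:
    """Return the reply the rule book prescribes for the incoming symbols.

    The lookup compares characters only; nothing here stores or uses
    the meaning of any symbol.
    """
    return RULE_BOOK.get(symbols, DEFAULT_REPLY)


if __name__ == "__main__":
    # The last question ("Do you like music?") has no rule, so the fallback is used.
    for question in ["你好吗？", "你叫什么名字？", "你喜欢音乐吗？"]:
        print(question, "->", chinese_room(question))
```

The English glosses in the comments are for the reader; the program never consults them, just as the man never learns what the symbols mean.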

This man would pass the Turing Test: he would fool an observer into thinking he actually understands the Chinese being passed in.

However, he himself has absolutely no understanding of Chinese.

So, if a computer were built that could “converse” in Chinese, would it actually understand the words it outputs? Does this thought experiment imply that computers can never truly understand anything?

This thought experiment was created by John Searle, a philosopher at Berkeley. Here is his explanation of the experiment:

“The point of the argument is this: if the man in the room does not understand Chinese on the basis of implementing the appropriate program for understanding Chinese then neither does any other digital computer solely on that basis because no computer, qua computer, has anything the man does not have.”

To be clear, he doesn’t mean that a computer can never understand; his claim is that running a program is not, on its own, enough. He later clarified:

“To put this point slightly more technically, the notion ‘same implemented program’ defines an equivalence class that is specified independently of any specific physical realization. But such a specification necessarily leaves out the biologically specific powers of the brain to cause cognitive processes. A system, me, for example, would not acquire an understanding of Chinese just by going through the steps of a computer program that simulated the behavior of a Chinese speaker.”

Over the years, this thought experiment has been used to challenge the idea that computers can have a mind or consciousness.

Searle’s argument rests on the claim that computers manipulate symbols using only syntax (rules about the form and structure of the symbols) and never semantics (knowledge of what those symbols mean in relation to reality).

There have been many interpretations of this experiment, and just as many arguments for and against the idea that computers will ever truly understand.

Personally, I don’t think this experiment shows that computers will never understand the way humans do. In fact, I think it shows that they can.

The man inside does not understand Chinese, but the room as a whole, man and rule book together, does.

Here is the reasoning behind that statement. We as humans can understand language, but individual neurons in the brain cannot understand anything. All a neuron does is receive signals of certain strengths and then output signals of different strengths, much like the man in the room receives Chinese symbols and passes out other Chinese symbols.

Each of the roughly 86 billion neurons in our brain acts as a mathematical function that receives inputs and produces an output. Boiled down, our consciousness arises from these many functions interacting. In the end, then, it must be determined by math.
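The “neuron as a function” idea can be sketched with the textbook artificial-neuron model: weight each incoming signal, add them up, and squash the total into an output strength between 0 and 1. This is a deliberate simplification (biological neurons are far more complex), and the weights and biases below are arbitrary values chosen only for illustration. The second function wires three such neurons together, so that the outputs of some functions become the inputs of another.

```python
import math


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Simplified artificial neuron: weighted sum of inputs, then a sigmoid.

    Returns an output "signal strength" between 0 and 1.
    """
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def tiny_network(signals: list[float]) -> float:
    """Two neurons feed a third: simple functions composed into a larger one.

    All weights and biases are arbitrary illustrative values.
    """
    hidden_a = neuron(signals, [0.8, -0.4], bias=0.1)
    hidden_b = neuron(signals, [-0.3, 0.9], bias=-0.2)
    return neuron([hidden_a, hidden_b], [1.5, -1.1], bias=0.0)


if __name__ == "__main__":
    print(tiny_network([1.0, 0.5]))
```

No single `neuron` call “knows” anything; each one only turns numbers into a number, which is the parallel to the man in the room that the argument draws.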

And yet, we have consciousness, or at least say we do.

Therefore, a computer running the same math should be able to have the same level of understanding as we do.

The debate goes on, and everyone has their own opinion on whether AI will ever gain consciousness or be able to think.

In the end, only time will tell.

Sarvasv
